Ensuring Data Anonymization in Mobile Learning Solutions

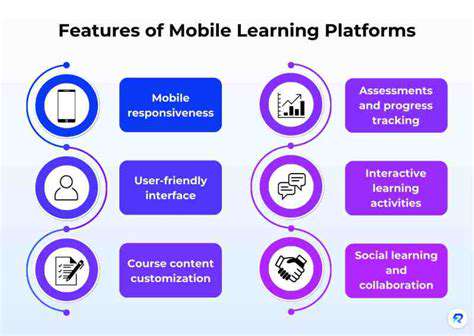

A fundamental principle of data anonymization is minimizing the amount of data collected. Mobile learning platforms often gather extensive user data, including location, usage patterns, and performance metrics. However, many of these details aren't essential for delivering effective learning experiences. Implementing a data minimization strategy requires careful evaluation of what information is truly necessary for the platform's functionality and performance enhancement, without negatively impacting user experience or hindering learning outcomes. This approach reduces both the risk of data breaches and the complexity of data management.

A thorough examination of the platform's core features is vital. The critical step is determining which data points each function actually requires and whether a less invasive alternative would achieve a similar result. For example, aggregated or coarse-grained location data might serve the same purpose as precise location tracking for certain analyses. Collecting less data not only protects user privacy but also leaves the platform with less to store, process, and secure.
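As an illustration of this kind of review, the following minimal sketch filters an incoming event down to an allow-list and coarsens its coordinates. The field names, the `ALLOWED_FIELDS` set, and the `minimize_event` helper are hypothetical and not taken from any particular platform.

```python
# Hypothetical allow-list: the only fields this sketch assumes the platform needs.
ALLOWED_FIELDS = {"lesson_id", "score", "duration_s"}


def minimize_event(event: dict, coord_decimals: int = 1) -> dict:
    """Keep allow-listed fields and coarsen location, dropping everything else."""
    reduced = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    # One decimal place of latitude is roughly 11 km, so the result
    # describes an area rather than an exact position.
    if "lat" in event and "lon" in event:
        reduced["lat"] = round(event["lat"], coord_decimals)
        reduced["lon"] = round(event["lon"], coord_decimals)
    return reduced


raw_event = {
    "user_email": "learner@example.com",  # not needed for analytics, dropped
    "device_id": "A1B2C3",                # not needed for analytics, dropped
    "lesson_id": "algebra-01",
    "score": 87,
    "duration_s": 412,
    "lat": 48.85837,
    "lon": 2.29448,
}
minimized = minimize_event(raw_event)
```

Applying the filter at the point of collection, rather than after storage, means the dropped fields never reach the backend at all.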

Pseudonymization: Replacing Identifiers with Unique Codes

Pseudonymization substitutes personally identifiable information (PII) with unique codes that do not identify a person on their own. This technique serves as an important middle ground between raw data and fully anonymous data. By exchanging names, email addresses, and other identifiers for pseudonyms, the data remains valuable for analysis while the risk of re-identification drops substantially. Pseudonymized data is not anonymous, however: anyone who holds the mapping can reverse it, so the pseudonymization system needs regular review and updates.

The effectiveness of pseudonymization hinges on secure management of the mapping between pseudonyms and original identifiers. Stringent access controls and strong encryption are crucial to prevent unauthorized access and keep the sensitive information confidential. Periodic audits of the pseudonymization process further strengthen its effectiveness.
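One common way to generate pseudonyms is a keyed hash. The sketch below uses HMAC-SHA256 from Python's standard library; it is an assumption about how a platform might implement this, not a prescribed method, and the `pseudonymize` helper is hypothetical. With a keyed hash there is no stored lookup table, so the secret key takes the place of the mapping and needs the same protection.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Return a stable pseudonym for an identifier using HMAC-SHA256."""
    # Normalize so that trivially different spellings map to the same pseudonym.
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


# Assumption: in a real deployment the key is loaded from a secrets manager
# and never stored alongside the pseudonymized data.
secret_key = secrets.token_bytes(32)

p1 = pseudonymize("Learner@Example.com", secret_key)
p2 = pseudonymize("learner@example.com ", secret_key)
p3 = pseudonymize("other@example.com", secret_key)
```

Here `p1` and `p2` are identical, so records for the same learner can still be joined, while `p3` differs. A plain unkeyed hash would be weaker, because anyone could hash a list of candidate email addresses and compare the results.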

Aggregation and Generalization: Summarizing and Rounding Data

Aggregation combines individual data points into summary statistics, such as averages or totals. For instance, rather than recording each student's quiz score, the platform could track average scores for specific lessons or student groups. This technique decreases data granularity, making it more challenging to connect individual users to particular data points. Generalization complements aggregation by rounding or truncating data values, such as recording age ranges instead of exact ages.
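Both ideas can be sketched in a few lines. The record layout and the helper names `average_score_by_lesson` and `age_range` below are hypothetical, chosen only to mirror the quiz-score and age-range examples above.

```python
from collections import defaultdict
from statistics import mean


def average_score_by_lesson(records: list[dict]) -> dict[str, float]:
    """Aggregation: replace per-student scores with one average per lesson."""
    scores = defaultdict(list)
    for record in records:
        scores[record["lesson_id"]].append(record["score"])
    return {lesson: mean(values) for lesson, values in scores.items()}


def age_range(age: int, width: int = 10) -> str:
    """Generalization: replace an exact age with a fixed-width range."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


records = [
    {"lesson_id": "algebra-01", "score": 80},
    {"lesson_id": "algebra-01", "score": 90},
    {"lesson_id": "geometry-02", "score": 70},
]
averages = average_score_by_lesson(records)  # algebra-01 -> 85, geometry-02 -> 70
bucket = age_range(23)                       # "20-29"
```

Note that an average over a very small group can still reveal individual values (the `geometry-02` average above is a single student's score), so a minimum group size is often enforced before publishing aggregates.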

Differential Privacy: Adding Random Noise to Data

Differential privacy adds carefully calibrated random noise to datasets or to the results of queries over them. The noise masks any single individual's contribution, making it difficult to infer sensitive information about specific users, even when the output is combined with other datasets. Differential privacy provides strong, mathematically provable privacy guarantees, making it particularly valuable for sensitive data like location information or personal preferences.

Applying differential privacy requires attention to the specific data and queries involved. The amount of noise must be calibrated to balance privacy against analytical utility: too little noise leaves individuals exposed, while too much makes the results unusable. This trade-off is commonly expressed through a privacy parameter, epsilon, where smaller values mean more noise and stronger protection. The choice of noise distribution and implementation approach also needs planning. Despite the implementation complexity, the privacy benefits often justify the effort, especially for sensitive data types.
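To make the calibration concrete, here is a minimal sketch of the Laplace mechanism for a counting query, one standard way to achieve differential privacy. The `noisy_count` helper is hypothetical. A count changes by at most 1 when one user is added or removed (sensitivity 1), so the noise scale is 1 divided by the privacy parameter epsilon.

```python
import random


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query with sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon  # sensitivity / epsilon
    # The difference of two independent exponential samples with mean `scale`
    # follows a Laplace distribution with location 0 and that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# A seeded generator keeps this demonstration reproducible. This is an
# assumption for illustration only; it is not suitable for real releases.
rng = random.Random(42)
released = noisy_count(128, epsilon=0.5, rng=rng)
```

With epsilon at 0.5 the noise scale is 2, so the released value typically lands within a few units of the true count of 128. This sketch ignores floating-point subtleties and privacy budget accounting across repeated queries, which is why production systems generally rely on a vetted differential privacy library.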

Data Security and Access Controls: Protecting Anonymized Data

All of the anonymization techniques described above can be undermined without strong data security measures. Strict access controls ensure that only authorized personnel can reach anonymized data. Robust encryption should protect data both in transit and at rest. Regular security audits and penetration testing help identify and address system vulnerabilities.

Clearly defined and enforced data retention policies prevent anonymized data from being stored longer than necessary. Regular reviews assess whether the stored information is still relevant and still adequately protected. Together, these procedures make data security and access controls an integral part of any effective anonymization strategy.
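A retention policy is easiest to enforce when it is expressed in code and run on a schedule. The following sketch assumes each record carries a timezone-aware `created_at` timestamp; the one-year period and the `purge_expired` helper are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: keep anonymized records for at most one year.
RETENTION_PERIOD = timedelta(days=365)


def purge_expired(records: list[dict], now: datetime,
                  retention: timedelta = RETENTION_PERIOD) -> list[dict]:
    """Return only the records that are still inside the retention window."""
    cutoff = now - retention
    return [r for r in records if r["created_at"] >= cutoff]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "created_at": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"id": 2, "created_at": datetime(2022, 1, 15, tzinfo=timezone.utc)},
]
kept = purge_expired(records, now)  # only the record with id 1 remains
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the policy easy to test.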

Implementing Anonymization in Mobile Application Development

Understanding the Need for Anonymization

Anonymization is a critical component of data security and privacy, especially in mobile environments. Mobile applications frequently collect large quantities of user data, including location information, browsing history, and personal preferences. If improperly managed, this data is vulnerable to breaches and misuse. Effective anonymization techniques are therefore essential for protecting sensitive information within mobile applications.

The potential for unauthorized access and data exploitation necessitates proactive privacy measures. Implementing robust anonymization methods helps mitigate risks and preserve user trust. By obscuring or removing identifying information, anonymization reduces re-identification possibilities and maintains user data confidentiality.

Data Sensitivity Analysis

A crucial first step in implementing anonymization is a comprehensive analysis of the data the mobile application collects. Identifying sensitive data elements is essential for developing an appropriate anonymization strategy. This means recognizing the risks associated with each data point and rating its sensitivity.

Careful consideration must be given to data context and potential breach impacts. Certain data types, such as financial information or location data, present higher risks compared to less sensitive data like user preferences. Accurate sensitivity assessments are crucial for tailoring anonymization methods appropriately.
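The outcome of such an assessment can be recorded as a simple field inventory. In the sketch below, the field names, the three sensitivity levels, and the `fields_requiring_protection` helper are all hypothetical; the ratings follow the examples above, with financial and location data rated higher than preferences.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical inventory produced by a sensitivity review.
FIELD_SENSITIVITY = {
    "ui_theme": Sensitivity.LOW,
    "quiz_score": Sensitivity.MEDIUM,
    "email": Sensitivity.HIGH,
    "gps_location": Sensitivity.HIGH,
    "payment_card": Sensitivity.HIGH,
}


def fields_requiring_protection(fields, threshold=Sensitivity.MEDIUM):
    """List fields at or above the threshold, in alphabetical order."""
    # Fields missing from the inventory are treated as HIGH so that
    # unreviewed data is protected by default.
    return sorted(
        f for f in fields
        if FIELD_SENSITIVITY.get(f, Sensitivity.HIGH) >= threshold
    )


flagged = fields_requiring_protection(
    ["ui_theme", "email", "gps_location", "unknown_field"]
)
```

Here `flagged` contains `email`, `gps_location`, and `unknown_field`, while the low-sensitivity `ui_theme` preference is left out.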

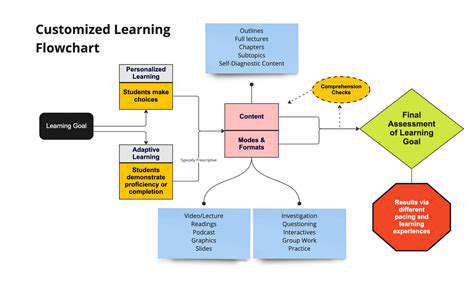

Choosing Appropriate Anonymization Techniques

Numerous anonymization techniques exist, each with distinct advantages and limitations. Methods like data masking, generalization, and aggregation can effectively protect user privacy while preserving data utility. Selecting optimal techniques depends on specific data characteristics and intended use cases. Balancing data utility against privacy considerations requires careful evaluation.

For instance, data masking might involve replacing sensitive information with pseudonyms or placeholders. Generalization could include aggregating data points into broader categories, reducing data precision while retaining overall meaning. These techniques form the foundation of robust anonymization strategies.
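A minimal sketch of masking with placeholders follows. The `mask_email` and `mask_digits` helpers are hypothetical, and how much of each value stays visible is an assumption that should follow the sensitivity of the field.

```python
def mask_email(email: str) -> str:
    """Keep the first character and the domain, mask the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable address, so mask it entirely
    return local[0] + "***@" + domain


def mask_digits(value: str, visible: int = 4) -> str:
    """Mask all but the last `visible` digits, ignoring separators."""
    digits = [c for c in value if c.isdigit()]
    hidden = max(len(digits) - visible, 0)
    return "*" * hidden + "".join(digits[hidden:])


masked_email = mask_email("learner@example.com")  # "l***@example.com"
masked_card = mask_digits("4111 1111 1111 1111")  # "************1111"
```

Masked values like these are intended for display and logs. They are not reversible, so they cannot be used to join records the way pseudonyms can.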

Implementing Anonymization in the Application Architecture

Integrating anonymization into a mobile application's architecture demands careful planning and execution. It involves modifying data collection, storage, and processing mechanisms so that sensitive information is handled securely at every stage. A sound implementation integrates seamlessly with the application's core functionality and keeps disruption to the user experience to a minimum.

Developers must consider potential performance impacts of anonymization techniques. Some methods may introduce minor performance overhead. Careful optimization becomes necessary to balance privacy protection with application efficiency.

Testing and Validation

Rigorous testing and validation confirm that the implemented anonymization is effective. This involves simulating various scenarios to evaluate how well the anonymization holds up against potential attacks. Comprehensive testing helps ensure that sensitive data remains protected against re-identification and unauthorized access.

Evaluation metrics should measure how well the anonymization strategy works, for example by estimating how difficult it is to re-identify individuals from the anonymized data. Understanding how effective each technique is in practice is crucial for maintaining user privacy.
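One widely used measure of re-identification difficulty is k-anonymity: every combination of indirectly identifying attributes (quasi-identifiers) should be shared by at least k records. The text above does not name a specific metric, so treat this as one possible choice. The record layout and the `k_anonymity` helper are hypothetical.

```python
from collections import Counter


def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Return the size of the smallest group sharing the same quasi-identifiers."""
    if not records:
        return 0
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return min(groups.values())


records = [
    {"age_range": "20-29", "region": "North", "score": 80},
    {"age_range": "20-29", "region": "North", "score": 90},
    {"age_range": "30-39", "region": "South", "score": 70},
    {"age_range": "30-39", "region": "North", "score": 60},
]
k_age = k_anonymity(records, ["age_range"])             # 2
k_both = k_anonymity(records, ["age_range", "region"])  # 1
```

A result of 1 means at least one record is unique on those attributes and could be singled out. A test suite can assert a minimum k before any dataset is released.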

Maintaining Anonymization throughout the Application Lifecycle

Anonymization isn't a one-time implementation but an ongoing aspect of mobile application data security. Regular reviews and updates to anonymization procedures address evolving threats and vulnerabilities. Sustaining compliance with privacy regulations and industry standards remains critical for long-term data security. Continuous monitoring ensures anonymization strategies maintain effectiveness over time.

Continually re-evaluating data collection practices and adopting updated anonymization techniques keeps the privacy approach aligned with how the application and its threats evolve.

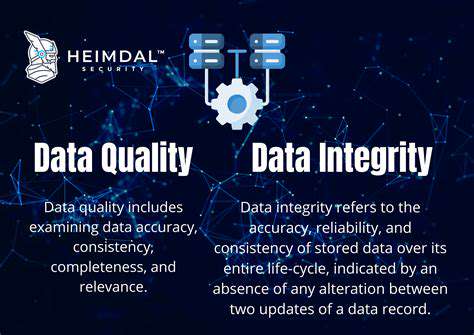

Ensuring Data Integrity and Utility After Anonymization

Data Validation Techniques

Data validation is a critical process for maintaining data integrity. It verifies that data complies with predefined rules and constraints, which helps identify and correct errors or inconsistencies before the data is used in analysis or processing. Comprehensive validation rules prevent incorrect data from entering the system. These rules can cover data type, range, format, and application-specific business rules.

Common validation techniques include checking for null values, verifying data types (such as validating date formats), and assessing value ranges. Thorough data validation significantly decreases error propagation risks throughout systems.
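The three checks mentioned above can be sketched as a single function that returns a list of problems. The record layout, the required fields, the `YYYY-MM-DD` date format, and the 0 to 100 score range are assumptions made for illustration.

```python
from datetime import datetime


def validate_record(record: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the record is valid."""
    errors = []

    # Null check on required fields.
    for field in ("lesson_id", "completed_on", "score"):
        if record.get(field) is None:
            errors.append(f"{field}: missing value")

    # Type and format check: dates must parse as YYYY-MM-DD.
    completed_on = record.get("completed_on")
    if completed_on is not None:
        try:
            datetime.strptime(completed_on, "%Y-%m-%d")
        except (TypeError, ValueError):
            errors.append("completed_on: expected YYYY-MM-DD")

    # Type and range check: scores must be numbers from 0 to 100.
    score = record.get("score")
    if score is not None:
        if isinstance(score, bool) or not isinstance(score, (int, float)):
            errors.append("score: expected a number")
        elif not 0 <= score <= 100:
            errors.append("score: expected a value from 0 to 100")

    return errors


good = validate_record(
    {"lesson_id": "algebra-01", "completed_on": "2024-03-15", "score": 88}
)
bad = validate_record(
    {"lesson_id": None, "completed_on": "15/03/2024", "score": 140}
)
```

Returning every error at once, instead of stopping at the first one, makes it easier to report and fix bad records in bulk.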

Data Backup and Recovery Procedures

Regular data backups are essential for disaster recovery and business continuity. Implementing comprehensive backup and recovery strategies minimizes data loss from unforeseen events like hardware failures or cyberattacks. This process involves creating multiple data copies stored in secure locations. Properly scheduled backups enable efficient data restoration following loss events.

Well-defined recovery plans outline necessary steps for data and system restoration, including backup location identification, restoration procedures, and recovery process testing. A thoroughly tested recovery plan provides critical business resilience following data breaches or natural disasters.
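Part of testing a recovery process is confirming that each backup copy matches its source. The sketch below copies a file and compares SHA-256 checksums using only the standard library; the `backup_and_verify` helper and the file names are hypothetical, and a real strategy would also cover off-site copies and scheduled restore drills.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> Path:
    """Copy a file into backup_dir and confirm the copy is byte-identical."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"backup of {source} failed verification")
    return target


# Demonstration in a temporary directory that is removed afterwards.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "anonymized_scores.csv"
    source.write_text("lesson_id,avg_score\nalgebra-01,85\n")
    copy = backup_and_verify(source, Path(tmp) / "backups")
    restored_ok = copy.read_text() == source.read_text()
```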

Data Security Measures

Robust security measures are vital for protecting sensitive data. Implementing encryption, access controls, and regular security audits safeguards data against unauthorized access or malicious activities. Protecting confidential information remains a top priority for organizations handling sensitive data.

Implementing strong authentication protocols, multi-factor authentication, and firewalls forms essential components of comprehensive security strategies. Regular security awareness training for employees helps prevent data breaches caused by human error. Continuous monitoring and vigilance are critical for maintaining data security.

Data Auditing and Monitoring

Regular data audits ensure data usage and maintenance comply with established policies and procedures. These audits provide valuable insights into data quality, accuracy, and completeness. Audits can identify inconsistencies or anomalies indicating potential issues. Comprehensive documentation of audit findings supports corrective actions.

Data Governance Policies

Establishing clear data governance policies is crucial for maintaining data quality and integrity. These policies define roles, responsibilities, and procedures for managing data throughout its lifecycle. Policies should address data ownership, access control, and retention periods. This framework ensures regulatory compliance and data consistency.

Beyond compliance, these policies give teams practical guidelines for responsible data management. Well-defined governance structures reduce the risk of breaches and legal issues and improve overall data management effectiveness.

Data Quality Control

Implementing data quality control measures maintains data accuracy, completeness, and consistency. This involves establishing clear standards and procedures for data entry and validation. Data cleansing and standardization processes eliminate errors and ensure accuracy. Regular quality checks and validation procedures should be implemented.

Reliable, consistent data in turn supports efficient and effective data-driven decision making. Addressing quality proactively, rather than correcting errors after they have spread, keeps the cost of those errors low.