Addressing Socioeconomic Bias in AI Educational Systems

The Role of Human Oversight and Continuous Improvement

The Importance of Human Judgment

Human oversight plays a crucial role in the responsible and ethical development and deployment of AI systems. Human expertise brings a nuanced understanding of complex situations that algorithms might miss, which leads to more effective and equitable outcomes. In practice, oversight means recognizing potential biases in data, evaluating the context of specific situations, and making informed decisions that align with societal values.

The ability to adapt to unforeseen circumstances and make judgments based on incomplete information is a key strength of human oversight. AI systems, while powerful, can struggle in situations requiring creativity, empathy, or common sense, which are hallmarks of human intelligence.

Identifying and Mitigating Bias

One critical aspect of human oversight is the proactive identification and mitigation of bias in AI systems. Human reviewers can meticulously examine training data and algorithms to pinpoint potential sources of bias, such as gender, racial, or socioeconomic disparities. This critical review process can lead to adjustments in the models to ensure they do not perpetuate or amplify existing inequalities.

This process also necessitates a thorough understanding of the potential societal impacts of AI systems. By considering the diverse perspectives of different groups, human oversight can ensure that AI systems are fair and equitable for everyone.
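As one illustration of what a reviewer might compute when examining outcomes for socioeconomic disparities, the sketch below compares how often a system produces a positive outcome for each group. The field names (`ses_band`, `recommended`), the sample records, and the 0.8 review threshold are all assumptions made for this example, not values taken from any particular system or standard.

```python
from collections import defaultdict

def selection_rates(records, group_key="ses_band", outcome_key="recommended"):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome
    return min(rates.values()) / highest

# Hypothetical records: did the system recommend an advanced track?
records = [
    {"ses_band": "low", "recommended": True},
    {"ses_band": "low", "recommended": False},
    {"ses_band": "low", "recommended": False},
    {"ses_band": "low", "recommended": False},
    {"ses_band": "high", "recommended": True},
    {"ses_band": "high", "recommended": True},
    {"ses_band": "high", "recommended": True},
    {"ses_band": "high", "recommended": False},
]

REVIEW_THRESHOLD = 0.8  # assumed cut-off for escalating to a human reviewer

rates = selection_rates(records)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove the system is unfair. It is a signal that a human reviewer should look at the data and the context behind it.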

Ensuring Transparency and Explainability

Transparency and explainability are paramount in building trust and accountability. Human oversight can help ensure that AI systems are designed and operated in a way that allows for clear understanding of their decision-making processes. This transparency can be achieved through the development of clear documentation, visualizations, and explanations that help users comprehend how the AI system arrived at a particular conclusion.

This approach enables users to better understand the strengths and limitations of the AI system, leading to better decision-making.

Safeguarding Against Malicious Use

Human oversight is essential for safeguarding against the potential misuse of AI systems. Human experts can evaluate the potential risks associated with different applications of AI, considering scenarios where the technology could be exploited for malicious purposes, such as in the development of autonomous weapons systems. This proactive evaluation helps to identify vulnerabilities and implement appropriate safeguards to prevent such misuse.

Maintaining Ethical Frameworks and Standards

Establishing and maintaining ethical frameworks and standards is a crucial function of human oversight. This involves developing clear guidelines for the development, deployment, and use of AI systems that align with societal values and ethical principles. These guidelines should be reviewed regularly to ensure they remain relevant and effective in addressing emerging challenges.

These standards must evolve in tandem with the rapid advancements in AI technology, ensuring that ethical considerations are central to all stages of the AI lifecycle.

Evaluating Performance and Adapting to Change

Human oversight is critical for evaluating the performance of AI systems and adapting them to changing circumstances. Human experts can monitor how effective the systems are in real-world applications and identify areas where a system is faltering or needs adjustment. This ongoing cycle of evaluation and feedback ensures that AI systems remain relevant and effective.

Regular assessments of the system's performance enable the identification of biases, limitations, and areas requiring modification to ensure the continued reliability and trustworthiness of the system.
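A regular assessment of this kind could be as simple as comparing accuracy across groups and flagging large gaps for human review. The sketch below assumes predictions and true outcomes are available as pairs per group. The group names, the sample data, and the `max_gap` tolerance of 0.1 are hypothetical choices for illustration only.

```python
def accuracy(pairs):
    """Fraction of (predicted, actual) pairs that match; pairs must be non-empty."""
    return sum(1 for predicted, actual in pairs if predicted == actual) / len(pairs)

def assess(results_by_group, max_gap=0.1):
    """Return per-group accuracy, the largest gap, and whether to escalate."""
    scores = {group: accuracy(pairs) for group, pairs in results_by_group.items()}
    gap = max(scores.values()) - min(scores.values())
    return scores, gap, gap > max_gap

# Hypothetical evaluation data: (predicted, actual) for each student group.
results_by_group = {
    "group_a": [(1, 1), (0, 0), (1, 1), (0, 1)],
    "group_b": [(1, 0), (0, 0), (1, 0), (0, 1)],
}

scores, gap, escalate = assess(results_by_group)
print(scores, gap, escalate)
```

The check only decides when to involve a person. What to change in the system remains a human judgment.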

Promoting Human-AI Collaboration

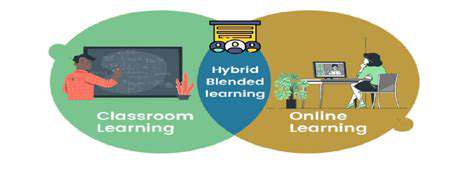

Human oversight fosters a collaborative relationship between humans and AI systems. Rather than treating AI as a replacement for human judgment, it encourages humans to use AI as a tool that augments their capabilities. This approach leverages the strengths of both humans and AI to achieve more impactful outcomes.

Ultimately, human oversight ensures that AI systems are developed and deployed in a way that benefits society as a whole, rather than exacerbating existing inequalities or creating new problems.
