Addressing Algorithmic Bias in Educational AI

Identifying and Quantifying Bias in Educational AI Models

Understanding the Sources of Bias

Educational AI models, while promising, can perpetuate and amplify existing societal biases. These biases can enter at several points: the data used to train a model, the design of the algorithm, and the metrics used to evaluate it. If the training data reflects historical inequalities or societal prejudices, the model will likely learn and replicate them, which can produce unfair or discriminatory outcomes for certain student groups. This is a critical concern because such outcomes can shape a student's learning trajectory and future opportunities.

Data Collection and Representation

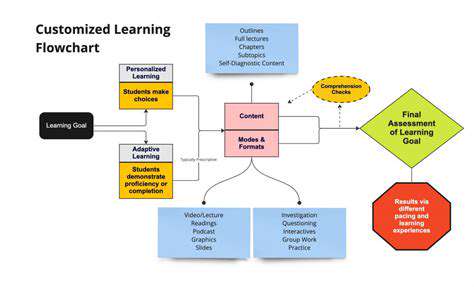

The quality and representativeness of the data used to train AI models are paramount. When diverse student populations are underrepresented in the training data, the model learns an inaccurate or incomplete picture of their learning styles, needs, and backgrounds. For example, if the data predominantly reflects the experiences of students from one socioeconomic background, the model may perform poorly for students from other backgrounds, leaving those students with weaker support and unequal access to educational resources.
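
A simple first check on representation is to compare each group's share of the training data with its share of the student population the model will serve. The sketch below assumes that a group label is already attached to each training record and that reference shares come from a source such as enrollment figures; the function name `representation_gaps` and the 5-percentage-point tolerance are illustrative choices, not an established standard.

```python
from collections import Counter


def representation_gaps(train_groups, reference_shares, tolerance=0.05):
    """Flag groups that are underrepresented in the training data.

    train_groups: one group label per training record (hypothetical labels).
    reference_shares: expected share of each group, e.g. from enrollment data
        (an assumption; use the population the model is meant to serve).
    tolerance: how far below its reference share a group may fall before
        it is flagged.
    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    counts = Counter(train_groups)
    total = len(train_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Illustrative data: 100 records, heavily skewed toward one background.
train_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}
print(representation_gaps(train_groups, reference))
```

In this made-up example, groups B and C are flagged, each about 10 percentage points below its reference share, while the overrepresented group A is not.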

Algorithmic Design and Implementation

The algorithms themselves can also introduce bias. Design choices such as the objective a model optimizes or the input features it uses can encode bias that is not apparent from the design itself and only shows up in the model's predictions or recommendations. For example, a feature such as a student's home postal code can act as a proxy for family income or race even when those attributes are excluded from the data. Careful design and rigorous testing are needed to identify and mitigate these biases and to ensure that the models assess and support all students fairly.

Evaluation Metrics and Assessment

Effective evaluation metrics are essential for identifying and quantifying bias in educational AI models. Traditional aggregate metrics may not reveal it: a model with high overall accuracy can still perform much worse for one student group than for another. More nuanced metrics that specifically address fairness and equity, such as differences in accuracy, error rates, or selection rates between groups, are needed alongside the aggregate ones.
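
The sketch below shows how an aggregate score can hide a disparity. It computes accuracy and selection rate (the share of students given a positive prediction) for each group; the difference in selection rates between groups is commonly called the demographic parity difference. The task, labels, and group names are made up for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def group_report(y_true, y_pred, groups):
    """Per-group accuracy and selection rate (share of positive predictions)."""
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, gr in enumerate(groups) if gr == g]
        yt = [y_true[i] for i in idx]
        yp = [y_pred[i] for i in idx]
        report[g] = {"accuracy": accuracy(yt, yp), "selection_rate": sum(yp) / len(yp)}
    return report


def max_gap(report, metric):
    """Largest difference in `metric` between any two groups."""
    values = [r[metric] for r in report.values()]
    return max(values) - min(values)


# 1 = "recommend for advanced coursework" (hypothetical task).
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A"] * 8 + ["B"] * 2

print("overall accuracy:", accuracy(y_true, y_pred))
report = group_report(y_true, y_pred, groups)
print(report)
print("accuracy gap:", max_gap(report, "accuracy"))
print("demographic parity difference:", max_gap(report, "selection_rate"))
```

Overall accuracy here is 0.8, yet every student in group B is misclassified and none is recommended; only the per-group breakdown makes that visible.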

Impact on Student Outcomes

Bias in educational AI models can significantly harm student outcomes. Students from marginalized groups might face disproportionately negative consequences, including lower grades, fewer opportunities for advanced coursework, and limited access to personalized learning support. This can exacerbate existing inequalities and keep these students from reaching their full academic potential.

Mitigating Bias Through Ethical Considerations

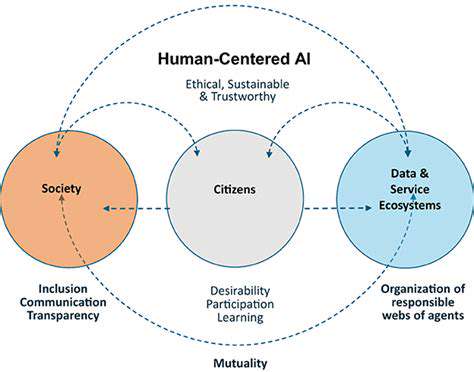

Addressing bias in educational AI models requires a proactive and ethical approach. This includes careful data curation, the implementation of fairness-aware algorithms, and the use of transparent evaluation metrics. Furthermore, ongoing monitoring and evaluation of model performance are crucial to identify and rectify any emerging biases. It is essential to incorporate diverse perspectives and expertise throughout the design and implementation process to ensure equitable outcomes.

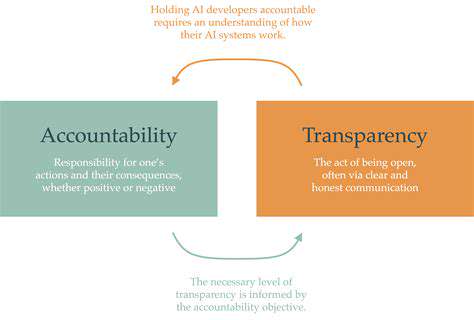

Transparency and Accountability in Model Development

Transparency and accountability are vital components of responsible AI development. Understanding how AI models arrive at their conclusions is crucial for identifying and mitigating bias. Models with explainable AI (XAI) capabilities can help stakeholders understand the reasoning behind a model's predictions, enabling a more thorough analysis of potential biases. This transparency builds the trust and accountability needed for equitable and effective educational systems.
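
One model-agnostic explanation technique that supports this kind of analysis is permutation importance: shuffle one input feature at a time and measure how much accuracy drops. A large drop for a feature that stands in for a protected attribute (for example, a neighborhood indicator) is a signal worth investigating. The dependency-free sketch below assumes a toy `predict` function and a hypothetical three-feature layout; for fitted scikit-learn estimators, `sklearn.inspection.permutation_importance` provides a maintained implementation.

```python
import random


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is randomly shuffled.

    predict: function mapping a list of feature rows to a list of predictions.
    X: list of feature rows (lists); y: true labels.
    """
    rng = random.Random(seed)

    def acc(rows):
        preds = predict(rows)
        return sum(p == t for p, t in zip(preds, y)) / len(y)

    baseline = acc(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - acc(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical features: [study_hours_bucket, attendance_flag, neighborhood_flag],
# with historical labels that happen to track the neighborhood flag.
X = [[i % 3, i % 2, (i // 2) % 2] for i in range(20)]
y = [row[2] for row in X]


def predict(rows):
    # A deliberately flawed toy model that relies only on the neighborhood proxy.
    return [row[2] for row in rows]


print(permutation_importance(predict, X, y))
```

Only the neighborhood feature shows a nonzero importance, which exposes the toy model's reliance on a proxy rather than on the study and attendance features.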

Developing Bias Mitigation Strategies for AI in Education

Defining Bias in AI Educational Systems

AI systems in education, like any data-driven technology, can inherit biases from the data they are trained on. These biases can manifest in various ways, from subtly skewing assessment scores to perpetuating stereotypes in learning recommendations. Identifying the specific types of bias, whether they stem from inaccuracies in historical data or from implicit societal prejudices, is crucial for developing effective mitigation strategies. Understanding how algorithmic bias can influence student outcomes, particularly for marginalized groups, is paramount to ensuring equitable educational opportunities.

Bias in AI systems can lead to unfair or discriminatory outcomes, potentially reinforcing existing inequalities. Careful examination of the data sources and algorithms used to train these systems is essential to identify and address these biases before they impact student experiences and educational trajectories. This includes analyzing the representation of different demographic groups in the training data and assessing the potential for algorithmic bias to disproportionately affect certain student populations.

Data Collection and Preprocessing for Bias Reduction

A critical first step in mitigating bias is making the data used to train AI systems in education as representative and free of bias as possible. This involves carefully selecting and curating data sources, actively seeking diversity in student demographics, and addressing known biases within existing datasets. Preprocessing techniques can further reduce the impact of existing biases, for example by removing problematic records or by re-weighting data points so that underrepresented combinations of group and outcome count for more during training.
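
Re-weighting can be made concrete with a common preprocessing scheme often called reweighing: each example with group g and label y receives the weight w(g, y) = P(g) · P(y) / P(g, y), so that group membership and outcome become statistically independent in the weighted data. The sketch below computes those weights in plain Python; the group names and labels are illustrative, and the resulting weights would be passed to any learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Combinations that are rarer than independence would predict (for example,
    positive labels in a disadvantaged group) receive weights above 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y] / n) / joint_counts[(g, y)]
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Share of the weighted examples in `group` that carry the positive label."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total


groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]  # raw positive rate: A 4/6, B 1/4
w = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(w, groups, labels, g), 3))
```

After weighting, both groups have the same positive rate (the overall rate of 0.5), and the weights still sum to the number of examples, so the effective dataset size is unchanged.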

Careful consideration must also be given to the data collection process itself. Data must be collected ethically and with appropriate consent. Data quality and completeness are also vital: incomplete or inconsistent records produce flawed training data, and when the gaps are concentrated in particular student groups, they can amplify bias against those groups.

Algorithmic Design for Fairness and Equity

The design of algorithms used for AI in education is critical to mitigating bias. Developers should prioritize fairness and equity in the algorithms' design principles, considering various factors that might lead to discriminatory outcomes. This includes using fairness-aware algorithms and metrics to evaluate and adjust model outputs to ensure more equitable outcomes for all students.

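Techniques like adversarial debiasing and fairness constraints can be incorporated to address bias within the algorithms directly. Adversarial debiasing trains the model alongside an adversary that tries to predict a protected attribute from the model's outputs, penalizing the model whenever the adversary succeeds; fairness constraints restrict training so that a chosen fairness metric stays within set bounds. Transparency in the decision-making processes of these algorithms is also essential to build trust and accountability within the educational system.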
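
Adversarial debiasing needs a full training loop, but the simpler idea of adjusting model outputs can be sketched briefly. The example below is a post-processing technique, not adversarial debiasing: it picks a separate score cutoff for each group so that every group is selected at roughly the same target rate. The function names, scores, and target rate are hypothetical.

```python
def group_thresholds(scores, groups, target_rate):
    """Per-group cutoff so that about `target_rate` of each group is selected.

    Assumes 0 <= target_rate <= 1. Ties at the cutoff can push a group
    slightly above the target rate.
    """
    thresholds = {}
    for g in set(groups):
        ranked = sorted((s for s, gr in zip(scores, groups) if gr == g), reverse=True)
        k = round(target_rate * len(ranked))  # number of students to select in group g
        thresholds[g] = ranked[k - 1] if k > 0 else float("inf")
    return thresholds


def apply_thresholds(scores, groups, thresholds):
    """1 = selected (e.g. offered an enrichment program), 0 = not selected."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]


scores = [0.9, 0.8, 0.7, 0.6, 0.6, 0.5, 0.4, 0.3]
groups = ["A"] * 4 + ["B"] * 4

# A single cutoff of 0.65 selects 3 of 4 students in group A and none in group B.
single = [int(s >= 0.65) for s in scores]
print("single cutoff:", single)

t = group_thresholds(scores, groups, target_rate=0.5)
print("per-group cutoffs:", t, apply_thresholds(scores, groups, t))
```

Equalizing selection rates is only one fairness criterion; it can conflict with others, such as equal error rates across groups, so choosing the criterion is itself a policy decision that belongs with the people accountable for the system.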

Human Oversight and Intervention in AI Systems

Implementing human oversight and intervention mechanisms is crucial to effectively manage and control the potential for bias in AI systems. Educators and administrators should be involved in monitoring the performance of AI systems, identifying areas where bias might manifest, and intervening to address these issues. This process necessitates establishing clear guidelines and protocols for human intervention, ensuring consistent and fair application.
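
A protocol for human intervention can be written down as an explicit routing rule, which makes it consistent and auditable. The sketch below assumes two hypothetical triggers: a model confidence below a set threshold, and membership in a group that a recent audit has flagged. The `Recommendation` fields and the 0.8 threshold are illustrative, not drawn from any particular system.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    student_id: str
    group: str
    label: str         # e.g. "advanced track" (hypothetical)
    confidence: float  # model's probability for `label`


def route(recs, min_confidence=0.8, flagged_groups=frozenset()):
    """Split recommendations into those applied automatically and those an educator reviews."""
    auto, review = [], []
    for rec in recs:
        needs_review = rec.confidence < min_confidence or rec.group in flagged_groups
        (review if needs_review else auto).append(rec)
    return auto, review


recs = [
    Recommendation("s1", "A", "advanced track", 0.95),
    Recommendation("s2", "A", "standard track", 0.62),
    Recommendation("s3", "B", "advanced track", 0.91),
]
auto, review = route(recs, flagged_groups={"B"})
print([r.student_id for r in auto], [r.student_id for r in review])
```

Keeping the triggers as named parameters lets administrators adjust them as a documented policy change rather than an ad hoc override.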

Evaluating and Monitoring AI Systems for Bias

Continuous evaluation and monitoring of AI systems are essential to detect and address bias as it evolves. Regular reviews should measure the system's performance across different demographic groups and look for bias in its recommendations, assessments, or learning pathways. This includes establishing benchmarks for fairness and equity and using data analysis techniques to identify patterns that indicate bias.
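
Monitoring against a fairness benchmark can be sketched as a check run on each batch of decisions. The example below compares selection rates across groups in each reporting period and raises an alert when the ratio of the lowest rate to the highest falls below 0.8, a threshold borrowed from the "four-fifths rule" used in US employment-selection guidelines; whether that benchmark suits a given educational setting is an assumption to revisit. Period names and data are illustrative.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (1s) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for d, g in zip(decisions, groups):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}


def monitor(batches, min_ratio=0.8):
    """Return (period, ratio, rates) for every period whose rate ratio is below min_ratio."""
    alerts = []
    for period, decisions, groups in batches:
        rates = selection_rates(decisions, groups)
        highest = max(rates.values())
        ratio = min(rates.values()) / highest if highest > 0 else 1.0
        if ratio < min_ratio:
            alerts.append((period, round(ratio, 3), rates))
    return alerts


groups = ["A"] * 4 + ["B"] * 4
batches = [
    ("week 1", [1, 0, 1, 0, 1, 0, 1, 0], groups),  # equal rates: no alert
    ("week 2", [1, 1, 1, 0, 1, 0, 0, 0], groups),  # 0.75 vs 0.25: alert
]
for alert in monitor(batches):
    print(alert)
```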

Regular audits of AI systems and their outputs are vital to confirm that they are performing as intended and not perpetuating bias. These audits should consider the impact on different student populations, with the goal of an equitable learning environment for all.

Promoting Collaboration and Dialogue for Ethical AI

Addressing bias in AI systems requires a collaborative effort involving researchers, educators, policymakers, and the wider community. Promoting dialogue and knowledge sharing between these diverse stakeholders is critical to developing effective strategies for mitigating bias. Creating platforms for open discussion and collaboration will help ensure that ethical considerations are central to the design, implementation, and evaluation of AI in education.

Establishing clear ethical guidelines and best practices for the development and deployment of AI systems in education is essential. These guidelines should be regularly reviewed and updated to reflect evolving understandings of bias and emerging technologies.
